Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed new tools to let soft robots better perceive what they’re interacting with.

“We wish to enable seeing the world by feeling the world,” said CSAIL director Daniela Rus, the Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science and the deputy dean of research for the MIT Stephen A. Schwarzman College of Computing.

“Soft robot hands have sensorised skins that allow them to pick up a range of objects, from delicate, such as potato chips, to heavy, such as milk bottles.”

To date, soft robots, which use squishy, flexible materials rather than rigid ones, have been limited because they lack the ability to see and classify items and to handle them with a softer, more delicate touch.

For example, a good robotic gripper needs to feel what it is touching (tactile sensing), and it needs to sense the positions of its fingers (proprioception).

However, such sensing has been missing from most soft robots until now, the researchers wrote in a new pair of papers.

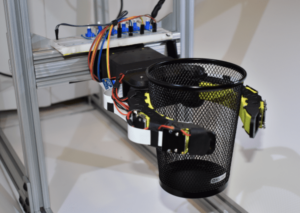

One paper builds off last year’s research from MIT and Harvard University, where a team developed a soft and strong robotic gripper in the form of a cone-shaped origami structure.

It collapses in on objects much like a Venus flytrap, picking up items that are as much as 100 times its weight.

To achieve versatility and adaptability closer to that of a human hand, a new team came up with tactile sensors, made from latex ‘bladders’ (balloons) connected to pressure transducers.

The new sensors let the gripper not only pick up objects as delicate as crisps but also classify them, so the robot better understands what it is picking up while still exhibiting a light touch.

When classifying objects, the sensors correctly identified 10 objects with over 90% accuracy, even when an object slipped out of grip.

“Unlike many other soft tactile sensors, ours can be rapidly fabricated, retrofitted into grippers, and show sensitivity and reliability,” said MIT postdoc Josie Hughes, lead author on a new paper about the sensors.

“We hope they provide a new method of soft sensing that can be applied to a wide range of different applications in manufacturing settings, like packing and lifting.”

In a second paper, a group of researchers created a soft robotic finger called GelFlex that uses embedded cameras and deep learning to enable high-resolution tactile sensing and proprioception (awareness of positions and movements of the body).

The gripper, which resembles a two-finger cup gripper, uses a tendon-driven mechanism to actuate the fingers.

When tested on metal objects of various shapes, the system had over 96% recognition accuracy.

“Our soft finger can provide high accuracy on proprioception and accurately predict grasped objects, and also withstand considerable impact without harming the interacted environment and itself,” said Yu She, lead author on a new paper on GelFlex.

“By constraining soft fingers with a flexible exoskeleton, and performing high-resolution sensing with embedded cameras, we open up a large range of capabilities for soft manipulators.”
