Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have unveiled a new robotic control system that enables machines to understand and control their own “bodies” using only visual input, eliminating the need for embedded sensors or pre-programmed models.

The technique, called Neural Jacobian Fields (NJF), allows a robot to observe its own movements with a camera and learn how different motor commands affect its body.

The system builds an internal model of the robot’s geometry and motion purely from visual data, paving the way for more flexible, affordable, and adaptive robotics.

Unlike conventional systems, which often rely on rigid components and sensor-heavy configurations, NJF enables control in robots that are soft, irregularly shaped, or lacking embedded sensors.

The model learns by watching the robot perform random movements, gradually associating visual changes with motor signals. Once trained, the robot can operate in real time using only one monocular camera.
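The idea of “associating visual changes with motor signals” can be illustrated with a much-simplified stand-in. NJF itself trains a neural network on multi-view video and predicts a Jacobian for every point on the robot. The sketch below instead assumes a single tracked image point whose displacement depends linearly on two motor commands through a fixed, unknown matrix. The names `TRUE_J`, `observe_displacement`, `babble` and `estimate_jacobian` are hypothetical. The simulated robot issues random command changes (“motor babbling”), records how the point moves, and recovers the matrix by ordinary least squares.

```python
import random

# Hypothetical ground truth, unknown to the learner: a 2x2 Jacobian mapping a
# change in two motor commands to the image-space displacement of one point.
TRUE_J = [[0.8, -0.3],
          [0.2, 1.1]]


def observe_displacement(du, rng, noise=0.0):
    """Simulated camera measurement of how far the tracked point moved."""
    return [TRUE_J[r][0] * du[0] + TRUE_J[r][1] * du[1] + rng.uniform(-noise, noise)
            for r in range(2)]


def babble(n, noise=0.0, seed=0):
    """Issue n random command changes and record (command, displacement) pairs."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        du = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        samples.append((du, observe_displacement(du, rng, noise)))
    return samples


def estimate_jacobian(samples):
    """Least-squares fit of dx ~ J du, i.e. J = (sum dx du^T)(sum du du^T)^-1."""
    a = [[0.0, 0.0], [0.0, 0.0]]  # accumulates the sum of du du^T
    b = [[0.0, 0.0], [0.0, 0.0]]  # accumulates the sum of dx du^T
    for du, dx in samples:
        for i in range(2):
            for j in range(2):
                a[i][j] += du[i] * du[j]
                b[i][j] += dx[i] * du[j]
    # Invert the 2x2 matrix a explicitly.
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    a_inv = [[a[1][1] / det, -a[0][1] / det],
             [-a[1][0] / det, a[0][0] / det]]
    return [[sum(b[i][k] * a_inv[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# Fit from 200 noisy random movements; the estimate should lie close to TRUE_J.
J_hat = estimate_jacobian(babble(200, noise=0.01))
print([[round(v, 3) for v in row] for row in J_hat])
```

A real robot’s Jacobian changes with its pose and differs from point to point on its body; NJF addresses this by predicting Jacobians with a neural network rather than fitting one fixed matrix as this toy does.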

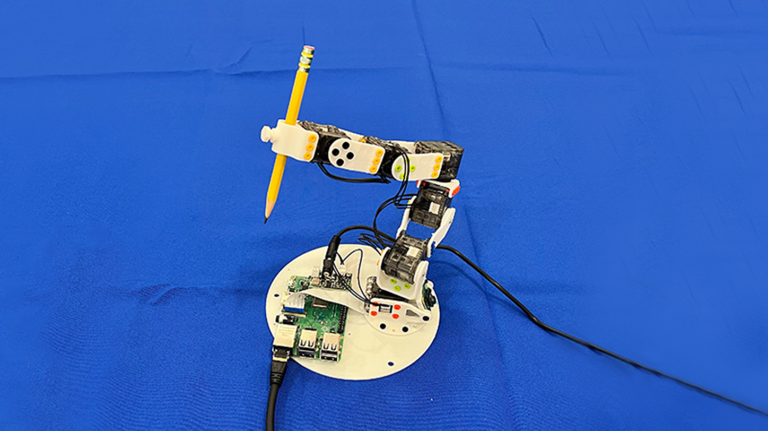

NJF’s capabilities have been tested across various robotic platforms, including a soft pneumatic hand, a 3D-printed robotic arm, a rigid Allegro hand, and a rotating platform without sensors.

In each instance, the system successfully learned how to control the device using visual feedback alone.

At the heart of the method is a neural network that captures two key elements: the three-dimensional shape of the robot and how each part of that shape moves in response to motor commands. NJF builds on neural radiance fields (NeRF), a technique for reconstructing 3D scenes from images, and extends it by also learning a “Jacobian field”: a function that, for any point on the robot’s body, predicts how that point moves when each control input changes.
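Once a Jacobian field is available, one simple way to use it for control is to choose the command change that best moves tracked points towards their targets in the least-squares sense, execute it, and repeat. The sketch below assumes a hypothetical sensorless turntable (loosely echoing the rotating platform NJF was tested on) with a single command, a small rotation about the vertical axis, and uses an analytic Jacobian field in place of a learned one. The functions `jacobian_field`, `rotate`, `solve_command` and `control_loop` are illustrative names, not part of NJF.

```python
import math


def jacobian_field(p):
    """Hypothetical Jacobian field of a turntable with one command (rotation angle).

    A small rotation d_theta about the z-axis moves point (x, y, z) by roughly
    d_theta * (-y, x, 0), so the 3x1 Jacobian is (-y, x, 0).
    """
    x, y, _ = p
    return (-y, x, 0.0)


def rotate(p, theta):
    """Ground-truth turntable motion, used only to simulate executing a command."""
    x, y, z = p
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y, z)


def solve_command(points, targets):
    """Scalar u minimising sum_i ||J(p_i) u - (t_i - p_i)||^2 (closed form)."""
    num = den = 0.0
    for p, t in zip(points, targets):
        j = jacobian_field(p)
        d = [ti - pi for ti, pi in zip(t, p)]
        num += sum(ji * di for ji, di in zip(j, d))
        den += sum(ji * ji for ji in j)
    if den == 0.0:
        raise ValueError("all points lie on the rotation axis; command is unobservable")
    return num / den


def control_loop(points, targets, steps=20):
    """Repeatedly re-linearise, solve for a command, and apply it."""
    total = 0.0
    for _ in range(steps):
        u = solve_command(points, targets)
        points = [rotate(p, u) for p in points]  # "execute" the command
        total += u
    return points, total


points = [(1.0, 0.0, 0.5), (0.0, 2.0, 0.5)]
targets = [rotate(p, 0.6) for p in points]
final, total = control_loop(points, targets)
print(round(total, 6))  # total rotation applied; should be close to 0.6 rad
```

Because a Jacobian is only a local, linear approximation, a single step undershoots when the required motion is large (here the first step rotates by sin 0.6 rather than 0.6 rad); re-linearising after every step, in the style of Gauss-Newton, closes the remaining gap within a few iterations.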

This approach not only offers greater design flexibility for robot builders, but also reduces reliance on complex hardware and expensive infrastructure.

Because the system does not require manual programming or detailed prior knowledge of the robot, it lowers the technical barrier to wider adoption of robotics.

Potential applications include agriculture, construction, and home robotics: settings where traditional control systems struggle because conditions are unpredictable or precise localisation systems are unavailable.

Currently, training requires multiple cameras and is specific to each individual robot, but researchers are exploring ways to simplify this, including using video captured on consumer devices.

Limitations remain, particularly in tasks that require tactile feedback or generalisation across different robot types. The researchers aim to extend NJF’s capabilities to more complex and dynamic settings.

The work represents a collaboration between experts in computer vision and soft robotics at MIT.

The research was supported by the Solomon Buchsbaum Research Fund, the MIT Presidential Fellowship, the National Science Foundation, and the Gwangju Institute of Science and Technology. The team published their findings in the journal Nature on 25 June 2025.
